Backtracking is a general algorithm for finding all solutions to some computational problem, that incrementally builds candidates to the solutions, and abandons each partial candidate c ("backtracks") as soon as it determines that c cannot possibly be completed to a valid solution.

Backtracking can be applied only for problems which admit the concept of a "partial candidate solution" and a relatively quick test of whether it can possibly be completed to a valid solution. It is useless, for example, for locating a given value in an unordered table. When it is applicable, however, backtracking is often much faster than brute force enumeration of all complete candidates, since it can eliminate a large number of candidates with a single test.

- It is one of the most general

algorithm design techniques.

- Many problems which deal with

searching for a set of solutions or for a optimal solution satisfying some

constraints can be solved using the backtracking formulation.

- To apply backtracking method, tne

desired solution must be expressible as an n-tuple (x1…xn) where xi is

chosen from some finite set Si.

- The problem is to find a vector,

which maximizes or minimizes a criterion function P(x1….xn).

- The major advantage of this method

is, once we know that a partial vector (x1,…xi) will not lead to an optimal solution

that (mi+1………..mn) possible test vectors may be

ignored entirely.

- Many problems solved using

backtracking require that all the solutions satisfy a complex set of

constraints.

- These constraints are classified

as:

- Explicit constraints.

- Implicit constraints.

1)

Explicit constraints

Explicit

constraints are rules that restrict each Xi to take values only from a given

set.

- All tupules that satisfy the

explicit constraint define a possible solution space for I.

2)

Implicit constraints:

The implicit constraint

determines which of the tuples in the solution space I can actually satisfy the

criterion functions.

Algorithm:

Algorithm IBacktracking

(n)

// This schema

describes the backtracking procedure .All solutions are generated in X[1:n]

//and printed as

soon as they are determined.

{

k=1;

While (k 0) do

0) do

{

if (there remains all untried

X[k]  T (X[1],[2],…..X[k-1])

and Bk (X[1],…..X[k])) is true ) then

T (X[1],[2],…..X[k-1])

and Bk (X[1],…..X[k])) is true ) then

{

if(X[1],……X[k] )is the path to the

answer node)

Then write(X[1:k]);

k=k+1; //consider the next step.

}

else k=k-1; //consider backtracking

to the previous set.

}

}

- All solutions are generated in

X[1:n] and printed as soon as they are determined.

- T(X[1]…..X[k-1]) is all possible

values of X[k] gives that X[1],……..X[k-1] have already been chosen.

- Bk(X[1]………X[k]) is a

boundary function which determines the elements of X[k] which satisfies

the implicit constraint.

- Certain problems which are solved

using backtracking method are,

1. Sum of subsets.

2. Graph coloring.

3. Hamiltonian cycle.

4. N-Queens problem.

STATE-SPACE TREE

It is convenient to implement this kind of processing by constructing a tree of choices being

made, called the state-space tree. Its root represents an initial state before the search for a

solution begins. The nodes of the first level in the tree represent the choices made for the

first component of a solution; the nodes of the second level represent the choices for the

second component, and so on.

A node in a state-space tree is said to be promising if it corresponds to a partially

constructed solution that may still lead to a complete solution; otherwise, it is called

nonpromising.

Leaves represent either nonpromising dead ends or complete solutions found by the

algorithm.

GENERATION OF

STATE SPACE TREE:

- Maintain an array X to represent

all elements in the set.

- The value of Xi indicates whether

the weight Wi is included or not.

- Sum is initialized to 0 i.e., s=0.

- We have to check starting from the

first node.

- If S+X(k)=M then we print the

subset b’coz the sum is the required output.

- If the above condition is not

satisfied then we have to check S+X(k)+W(k+1)<=M. If so, we have to

generate the left sub tree. It means W(t) can be included so the sum will

be incremented and we have to check for the next k.

- After generating the left sub tree

we have to generate the right sub tree, for this we have to check

S+W(k+1)<=M.B’coz W(k) is omitted and W(k+1) has to be selected.

- Repeat the process and find all

the possible combinations of the subset.

Algorithm:

Algorithm

sumofsubset(s,k,r)

{

//generate the

left child. note s+w(k)<=M since Bk-1 is true.

X{k]=1;

If (S+W[k]=m)

then write(X[1:k]); // there is no recursive call here as

W[j]>0,1<=j<=n.

Else if

(S+W[k]+W[k+1]<=m) then sum of sub (S+W[k], k+1,r- W[k]);

//generate right

child and evaluate Bk.

If ((S+ r-

W[k]>=m)and(S+ W[k+1]<=m)) then

{

X{k]=0;

sum of sub (S, k+1, r- W[k]);

}

}

SUBSET-SUM PROBLEM

Subset-Sum Problem is finding a subset of a given set S = {s1,s2….sn} of n positive

integers whose sum is equal to a given positive integer d.

For example, for S = {1, 2, 5, 6, 8) and d = 9, there are two solutions: {1, 2, 6} and {1, 8}.

Of course, some instances of this problem may have no solutions.

It is convenient to sort the set‘s elements in increasing order. So we will assume that

s1 ≤ s2 ≤ ……. ≤ sn

- The state-space tree can be constructed as a binary tree as that in the following figure for the instances S = (3, 5, 6, 7) and d = 15.

- The root of the tree represents the starting point, with no decisions about the given elements made as yet.

- Its left and right children represent, respectively, inclusion and exclusion ofs1 in a set being sought.

- Similarly, going to the left from a node of the first level corresponds to inclusion of s2, while going to the right corresponds to its exclusion, and soon.

- Thus, a path from the root to a node on the ith level of the tree indicates which of the first i numbers have been included in the subsets represented by that node.

- We record the value of s‘ the sum of these numbers, in the node, Ifs is equal to d. we have a solution to the problem.

- We can either, report this result and stop or, if all the solutions need to he found, continue by backtracking to the node‘s parent.

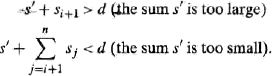

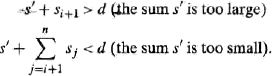

- If s‘ is not equal to d, we can terminate the node as non promising if either of the two inequalities holds:

HAMILTONIAN CIRCUIT PROBLEM

Procedure:

1. Define a solution vector

X(Xi……..Xn) where Xi represents the I th

visited vertex of the proposed cycle.

2. Create a cost adjacency matrix

for the given graph.

3. The solution array initialized

to all zeros except X(1)=1,b’coz the cycle should start at vertex ‘1’.

4.Now we have to find the second

vertex to be visited in the cycle.

5. The vertex from 1 to n are

included in the cycle one by one by checking 2 conditions,

1.There

should be a path from previous visited vertex to current vertex.

2.The current vertex must be

distinct and should not have been visited earlier.

6.When these two conditions are satisfied the current vertex is included in the

cycle, else the next vertex is tried.

7.When

the nth vertex is visited we have to check, is there any path from nth vertex

to first 8vertex. if no path, the go

back one step and after the previous visited node.

8.Repeat

the above steps to generate possible Hamiltonian cycle.

Algorithm:(Finding all Hamiltonian cycle)

Algorithm

Hamiltonian (k)

{

Loop

Next

value (k)

If (x (k)=0)

then return;

{

If k=n then

Print (x)

Else

Hamiltonian

(k+1);

End if

}

Repeat

}

Algorithm Nextvalue

(k)

{

Repeat

{

X [k]=(X [k]+1) mod (n+1); //next vertex

If (X [k]=0) then return;

If (G [X [k-1], X [k]]  0) then

0) then

{

For j=1 to k-1 do if (X [j]=X [k]) then

break;

// Check for distinction.

If (j=k) then //if true then the vertex is distinct.

If ((k<n) or ((k=n) and G [X [n], X [1]]

0)) then return;

0)) then return;

}

} Until (false);

}

8-QUEENS PROBLEM:

This 8 queens problem is to place n-queens in an ‘N*N’ matrix in

such a way that no two queens attack each otherwise no two queens should be in

the same row, column, diagonal.

Solution:

- The solution vector X (X1…Xn) represents a solution in which Xi is

the column of the th row

where I th queen is placed.

- First, we have to check no two queens are in same row.

- Second, we have to check no two queens are in same column.

- The function, which is used to check these two conditions, is [I, X

(j)], which gives position of the I th queen, where I represents the

row and X (j) represents the column position.

- Third, we have to check no two queens are in it diagonal.

- Consider two dimensional array A[1:n,1:n] in which we observe that every element on the same

diagonal that runs from upper left to lower right has the same value.

- Also, every element on the same diagonal that runs from lower right

to upper left has the same value.

- Suppose two queens are in same position (i,j) and (k,l) then two

queens lie on the same diagonal , if and only if |j-l|=|I-k|.

STEPS TO GENERATE THE SOLUTION:

- Initialize x array to zero and start by placing the first queen in

k=1 in the first row.

- To find the column position start from value 1 to n, where ‘n’ is

the no. Of columns or no. Of queens.

- If k=1 then x (k)=1.so (k,x(k)) will give the position of the k th

queen. Here we have to check whether there is any queen in the same column or

diagonal.

- For this considers the previous position, which had already, been

found out. Check whether

X (I)=X(k) for column

|X(i)-X(k)|=(I-k) for the same diagonal.

- If any one of the conditions is true then return false indicating

that k th queen can’t be placed in position X (k).

- For not possible condition increment X (k) value by one and

precede d until the position is found.

- If the position X (k)

n and k=n then the solution

is generated completely.

n and k=n then the solution

is generated completely.

- If k<n, then increment the ‘k’ value and find position of the

next queen.

- If the position X (k)>n then k th queen cannot be

placed as the size of the matrix is ‘N*N’.

- So decrements the ‘k’ value by one i.e. we have to back track and

after the position of the previous queen.

Algorithm:

Algorithm place

(k,I)

//return true if

a queen can be placed in k th row and I th column.

otherwise it returns //

//false .X[] is

a global array whose first k-1 values have been set. Abs® returns the

//absolute value of r.

{

For j=1 to k-1 do

If ((X [j]=I) //two in same column.

Or (abs (X [j]-I)=Abs (j-k)))

Then return

false;

Return true;

}

Algorithm Nqueen (k,n)

//using

backtracking it prints all possible positions of n queens in ‘n*n’ chessboard.

So

//that they are non-tracking.

{

For I=1 to n do

{

If place (k,I) then

{

X [k]=I;

If (k=n) then write (X

[1:n]);

Else nquenns(k+1,n) ;

}

}

}